Band-in-a-Box for Windows


Joined: May 2002

Posts: 7,620

PG Music Staff


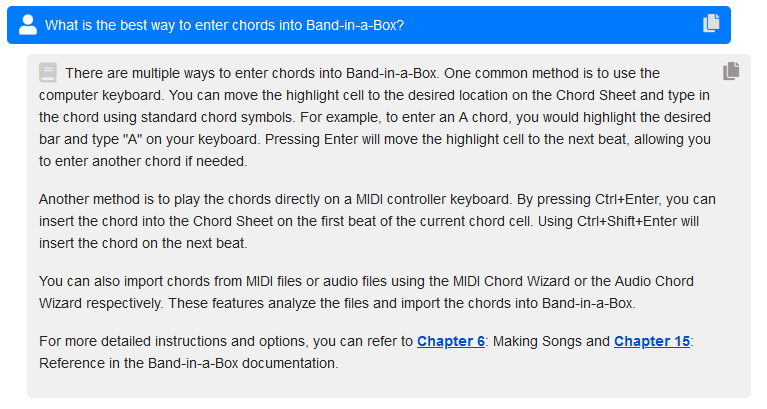

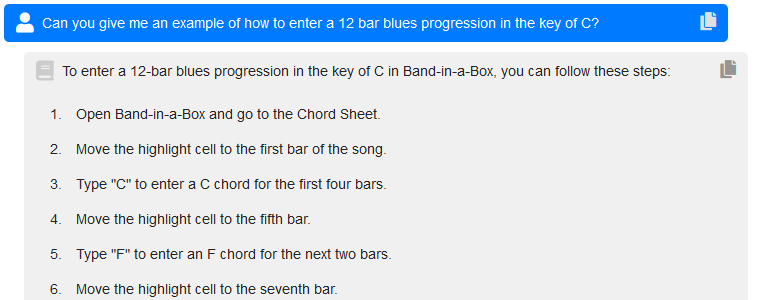

So what is with the AI instructions which are so off the mark? Is it just guessing? How can it be so intelligent and be so wrong?

The answer was wrong, but actually not too far off. In the Notation Window, you DO get the Insert and edit Section Text context menu items, and they work exactly as it described. It was wrong about the context menu item being in the Chord Sheet, although it WOULD make sense for us to have a menu item for the section text layer in the Chord Sheet's context menu. It is not clear why it said "depending on the software", since it should 'know' that it's answering the question in the context of the Band-in-a-Box software.

To answer your question, it can be wrong for the same reasons that ChatGPT is often wrong, since it uses language models developed by OpenAI. All of this technology currently suffers from the very significant and poorly understood problem of hallucinations: it sometimes generates false or nonsensical information that doesn't seem to fit with the data it was fed. You will have encountered this if you use ChatGPT for factual data (as opposed to creative writing, for example): answers that sound extremely confident but are inaccurate or even completely bogus. (As an aside, if you think you haven't encountered this, just make sure to verify things you know nothing about with other sources...)

We can improve the quality and amount of data it has about Band-in-a-Box, which should help reduce hallucinations. I asked it a few random questions about Band-in-a-Box and it did a decent job. I find that it can answer some questions correctly even if the answer is not in the data. For example, somehow it could generate an answer on entering a blues progression (not a perfect answer, but a correct one), even though that specific information is not in the docs.

...

Last edited by Andrew - PG Music; 09/22/23 09:17 AM.

Andrew

PG Music Inc.


Ask sales and support questions about Band-in-a-Box using natural language.

ChatPG's knowledge base includes the full Band-in-a-Box User Manual and sales information from the website.


